Skinput Technology

Published on Aug 15, 2016

Abstract

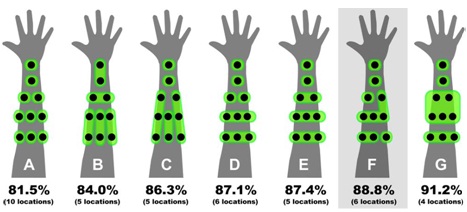

Microsoft has developed Skinput, a technology that appropriates the human body for acoustic transmission, allowing the skin to be used as an input surface. In particular, we resolve the location of finger taps on the arm and hand by analyzing mechanical vibrations that propagate through the body.

We collect these signals using a novel array of sensors worn as an armband. This approach provides an always-available, naturally portable, on-body finger input system. We assess the capabilities, accuracy, and limitations of our technique through a two-part, twenty-participant user study. To further illustrate the utility of our approach, we conclude with several proof-of-concept applications we developed.

Introduction of Skinput Technology

The primary goal of Skinput is to provide an always-available mobile input system - that is, an input system that does not require a user to carry or pick up a device. A number of alternative approaches have been proposed that operate in this space. Techniques based on computer vision are popular. These, however, are computationally expensive and error-prone in mobile scenarios (where, e.g., non-input optical flow is prevalent). Speech input is a logical choice for always-available input, but it is limited in its precision in unpredictable acoustic environments and suffers from privacy and scalability issues in shared environments. Other approaches have taken the form of wearable computing.

This typically involves a physical input device built in a form considered to be part of one's clothing. For example, glove-based input systems allow users to retain most of their natural hand movements, but are cumbersome, uncomfortable, and disruptive to tactile sensation. Post and Orth present a "smart fabric" system that embeds sensors and conductors into fabric, but taking this approach to always-available input necessitates embedding technology in all clothing, which would be prohibitively complex and expensive.

The SixthSense project proposes a mobile, always-available input/output capability by combining projected information with a color-marker-based vision tracking system. This approach is feasible, but suffers from serious occlusion and accuracy limitations. For example, determining whether a finger has tapped a button or is merely hovering above it is extraordinarily difficult.

Bio-Sensing:

Skinput leverages the natural acoustic conduction properties of the human body to provide an input system, and is thus related to previous work in the use of biological signals for computer input. Signals traditionally used for diagnostic medicine, such as heart rate and skin resistance, have been appropriated for assessing a user's emotional state. These features are generally subconsciously driven and cannot be controlled with sufficient precision for direct input. Similarly, brain sensing technologies such as electroencephalography (EEG) and functional near-infrared spectroscopy (fNIR) have been used by HCI researchers to assess cognitive and emotional state; this work also primarily looked at involuntary signals.

In contrast, brain signals have been harnessed as a direct input for use by paralyzed patients, but direct brain-computer interfaces (BCIs) still lack the bandwidth required for everyday computing tasks, and require levels of focus, training, and concentration that are incompatible with typical computer interaction.

There has been less work relating to the intersection of finger input and biological signals. Researchers have harnessed the electrical signals generated by muscle activation during normal hand movement through electromyography (EMG). At present, however, this approach typically requires expensive amplification systems and the application of conductive gel for effective signal acquisition, which would limit the acceptability of this approach for most users. The input technology most related to our own is that of Amento et al., who placed contact microphones on a user's wrist to assess finger movement. However, this work was never formally evaluated, and it is constrained to finger motions in one hand.

The Hambone system employs a similar setup and, through a hidden Markov model (HMM), yields classification accuracies around 90% for four gestures (e.g., raise heels, snap fingers). Performance of false positive rejection remains untested in both systems at present. Moreover, both techniques required the placement of sensors near the area of interaction (e.g., the wrist), increasing the degree of invasiveness and visibility. Finally, bone conduction microphones and headphones - now common consumer technologies - represent an additional bio-sensing technology that is relevant to the present work. These leverage the fact that sound frequencies relevant to human speech propagate well through bone.

Bone conduction microphones are typically worn near the ear, where they can sense vibrations propagating from the mouth and larynx during speech. Bone conduction headphones send sound through the bones of the skull and jaw directly to the inner ear, bypassing transmission of sound through the air and outer ear and leaving an unobstructed path for environmental sounds.