iMouse

Published on Nov 23, 2015

Abstract

Remarkable advances in micro-sensing microelectromechanical systems (MEMS) and wireless communication technologies have promoted the development of wireless sensor networks (WSNs). A WSN consists of many sensor nodes densely deployed in a field. Each node can collect environmental information, and together the nodes support multihop ad hoc routing.

WSNs provide an inexpensive and convenient way to monitor physical environments.

With their environment-sensing capability, WSNs can enrich human life in applications such as healthcare, building monitoring, and home security. A wireless sensor network (WSN) is a wireless network consisting of spatially distributed autonomous devices using sensors to cooperatively monitor physical or environmental conditions, such as temperature, sound, vibration, pressure, motion or pollutants, at different locations.

The development of wireless sensor networks was originally motivated by military applications such as battlefield surveillance. However, wireless sensor networks are now used in many civilian application areas, including environment and habitat monitoring, healthcare, home automation, and traffic control. The applications for WSNs are many and varied. They are used in commercial and industrial applications to monitor data that would be difficult or expensive to monitor using wired sensors. They could be deployed in wilderness areas, where they would remain for many years (monitoring some environmental variables) without the need to recharge or replace their power supplies. They could also form a perimeter around a property and monitor the progression of intruders, passing information from one node to the next.

Related Work In Wireless Surveillance

Traditional visual surveillance systems continuously videotape scenes to capture transient or suspicious objects. Such systems typically need to automatically interpret the scenes and understand or predict the actions of observed objects from the acquired videos. One example is a video-based surveillance network in which an 802.11 WLAN card transmits the information that each video camera captures. Researchers in robotics have also discussed the surveillance issue: robots, or cameras installed on walls, identify obstacles or humans in the environment, and these systems guide robots around the obstacles.

Such systems normally must extract meaningful information from massive visual data, which requires significant computation or manpower. Some researchers use static WSNs for object tracking. These systems assume that objects can emit signals that sensors can track. However, results reported from a WSN are typically brief and lack in-depth information. Edoardo Ardizzone and his colleagues propose a video-based surveillance system for capturing intrusions by merging WSNs and video processing techniques.

The system complements data from WSNs with videos to capture possible scenes with intruders. However, the cameras in this system lack mobility, so they can monitor only some locations. Researchers have also proposed mobilizers that move sensors to enhance coverage of the sensing field and to strengthen network connectivity. Because the integration of WSNs with surveillance systems has not been well addressed, the iMouse system was proposed.

iMouse System Architecture

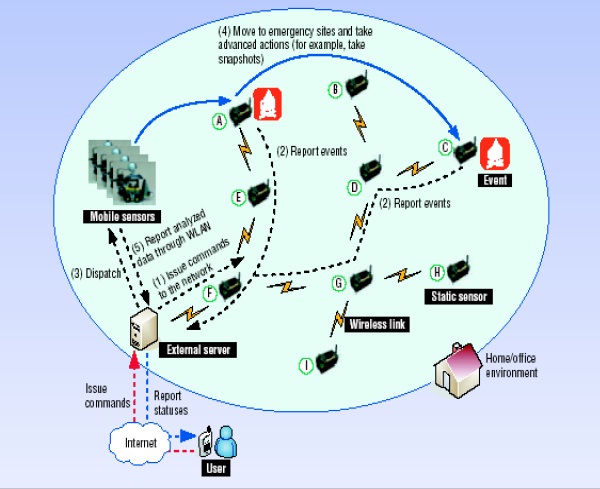

Figure 1 shows the iMouse architecture. Its three main components are:

· Static sensors

· Mobile sensors

· External server

The following steps summarize the operations shown in Figure 1:

(1) The user issues commands to the network through the server.

(2) Static sensors monitor the environment and report events.

(3) When notified of an unusual event, the server notifies the user and dispatches mobile sensors.

(4) Mobile sensors move to the emergency sites and collect data.

(5) Mobile sensors report back to the server after collecting data.
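The five steps above can be sketched from the server's side. This is a minimal Python illustration, not the iMouse implementation: the `EventReport` and `MobileSensor` types, the `dispatch` and `report_back` functions, and the rule of sending the nearest idle mobile sensor to each event site are all assumptions made for the example.

```python
import math
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Point = Tuple[float, float]

@dataclass
class EventReport:
    """Step (2): an event reported by a static sensor at a known location."""
    sensor_id: str
    location: Point
    kind: str

@dataclass
class MobileSensor:
    name: str
    position: Point
    busy: bool = False

def dispatch(events: List[EventReport], mobiles: List[MobileSensor],
             notify: Callable[[str], None]) -> Dict[str, str]:
    """Step (3): notify the user of every event, then send the nearest idle
    mobile sensor to each event site (hypothetical assignment rule)."""
    assignments: Dict[str, str] = {}
    for ev in events:
        notify(f"{ev.kind} reported by sensor {ev.sensor_id}")
        idle = [m for m in mobiles if not m.busy]
        if not idle:
            continue  # no free mobile sensor; this event waits for a later round
        nearest = min(idle, key=lambda m: math.dist(m.position, ev.location))
        nearest.busy = True  # step (4): it travels to the site and collects data
        assignments[ev.sensor_id] = nearest.name
    return assignments

def report_back(mobile: MobileSensor, site: Point) -> None:
    """Step (5): after reporting, the mobile sensor is free again at the site."""
    mobile.position = site
    mobile.busy = False
```

Greedy nearest-first assignment is the simplest possible rule; when several events arrive together it does not necessarily minimize the total distance the mobile sensors travel.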

The static sensors form a WSN to monitor the environment and notify the server of unusual events.

Each static sensor comprises a sensing board and a mote for communication. In our current prototype, the sensing board can collect three types of data: light, sound, and temperature. We assume that the sensors are in known locations, which users can establish through manual setting, GPS, or any localization scheme. An event occurs when a sensory input rises above or falls below a predefined threshold. Sensors can also combine inputs to define a new event. For example, a sensor can interpret a combination of light and temperature readings as a potential fire emergency. To detect an explosion, a sensor can use a combination of temperature and sound readings. For home security, it can use an unusual sound or light reading.
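The threshold rule and the combined events described above can be expressed compactly. The following Python sketch assumes hypothetical threshold values and units (the text gives none) and hypothetical event names such as `possible_fire`; only the structure, single-reading thresholds plus combinations of abnormal readings, follows the description.

```python
from typing import Dict, Optional, Set, Tuple

# Hypothetical (low, high) bounds per sensing type; None means "no bound on that side".
THRESHOLDS: Dict[str, Tuple[Optional[float], Optional[float]]] = {
    "light": (None, 800.0),       # assumed units and value
    "sound": (None, 90.0),        # assumed units and value
    "temperature": (None, 50.0),  # assumed degrees Celsius
}

# Hypothetical combined events: raised when all listed readings are abnormal together.
COMBINED_EVENTS: Dict[str, Set[str]] = {
    "possible_fire": {"light", "temperature"},
    "possible_explosion": {"temperature", "sound"},
}

def abnormal_readings(readings: Dict[str, float]) -> Set[str]:
    """Return the sensing types whose reading is below `low` or above `high`."""
    abnormal = set()
    for kind, value in readings.items():
        low, high = THRESHOLDS.get(kind, (None, None))
        if (low is not None and value < low) or (high is not None and value > high):
            abnormal.add(kind)
    return abnormal

def detect_events(readings: Dict[str, float]) -> Set[str]:
    """Single-reading events plus any combined events they complete.
    An empty result means the sensor has nothing to report."""
    abnormal = abnormal_readings(readings)
    combined = {name for name, needed in COMBINED_EVENTS.items() if needed <= abnormal}
    return {f"abnormal_{kind}" for kind in abnormal} | combined
```

A single abnormal reading (for example, a loud sound for home security) still produces an event on its own; the combined events only add a higher-level interpretation on top of it.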

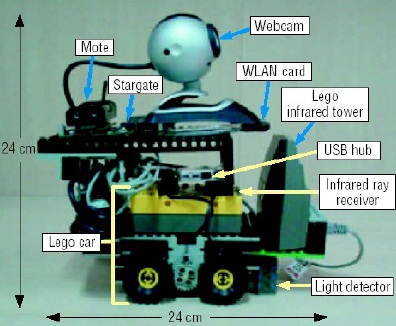

To conserve static sensors’ energy, event reporting is reactive. Mobile sensors can move to event locations, exchange messages with other sensors, take snapshots of event scenes, and transmit images to the server. As Figure 2 shows, each mobile sensor is equipped with a Stargate processing board, which is connected to the following:

· A Lego car, to support mobility;

· A mote, to communicate with the static sensors;

· A web cam, to take snapshots; and

· An IEEE 802.11 WLAN card, to support high-speed, long-distance communications, such as transmitting images.

The Stargate controls the movement of the Lego car and the web cam.
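A mobile sensor's work at the event sites (move there, take a snapshot, transmit the image) can be sketched as follows. The sketch is hypothetical: `drive_to`, `take_snapshot`, and `send_image` stand in for the Stargate's control of the Lego car, the web cam, and the WLAN card, and visiting sites in greedy nearest-first order is an assumed heuristic, not the navigation scheme used by iMouse.

```python
import math
from typing import Any, Callable, List, Tuple

Point = Tuple[float, float]

def plan_route(start: Point, sites: List[Point]) -> List[Point]:
    """Order the sites greedily: always drive to the closest unvisited one."""
    route: List[Point] = []
    current, remaining = start, list(sites)
    while remaining:
        nxt = min(remaining, key=lambda p: math.dist(current, p))
        remaining.remove(nxt)
        route.append(nxt)
        current = nxt
    return route

def run_mission(start: Point, sites: List[Point],
                drive_to: Callable[[Point], None],
                take_snapshot: Callable[[], Any],
                send_image: Callable[[Point, Any], None]) -> None:
    """Visit each site in route order, photograph it, and upload the image."""
    for site in plan_route(start, sites):
        drive_to(site)           # placeholder for steering the Lego car
        image = take_snapshot()  # placeholder for triggering the web cam
        send_image(site, image)  # placeholder for the 802.11 upload to the server
```

Passing the hardware actions in as callables keeps the visiting logic testable without a robot; the greedy order is cheap to compute but can produce a longer tour than an optimal ordering.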

System operations and control flows

To illustrate how iMouse works, we use the fire emergency scenario shown in Figure 1. On receiving the server's command, the static sensors form a treelike network to collect sensing data. Suppose static sensors A and C report unusually high temperatures, which the server interprets as a possible fire emergency in those sensors' neighborhoods. The server notifies the users and dispatches mobile sensors to visit the sites. On visiting A and C, the mobile sensors take snapshots and perform in-depth analyses. For example, the reported images might reveal the fire's source, identify flammable material in the vicinity, or locate people left in the building.
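The treelike network in this scenario can be illustrated with a breadth-first construction rooted at the sensor attached to the server (the sink), in which each static sensor forwards its reports to its parent. This is a minimal sketch under assumptions: the `neighbors` connectivity map, the sink name, and the choice of a hop-count (breadth-first) tree are illustrative, since the text does not say how iMouse builds its tree.

```python
from collections import deque
from typing import Dict, List, Optional

def build_tree(sink: str, neighbors: Dict[str, List[str]]) -> Dict[str, Optional[str]]:
    """Breadth-first construction: each node's parent is the neighbor that
    reached it first, so every node gets a minimum-hop path to the sink."""
    parent: Dict[str, Optional[str]] = {sink: None}
    queue = deque([sink])
    while queue:
        node = queue.popleft()
        for nb in neighbors.get(node, []):
            if nb not in parent:  # not yet attached to the tree
                parent[nb] = node
                queue.append(nb)
    return parent  # nodes unreachable from the sink are absent

def path_to_sink(node: str, parent: Dict[str, Optional[str]]) -> List[str]:
    """Hops an event report takes from `node` up to the sink."""
    path = [node]
    while parent[path[-1]] is not None:
        path.append(parent[path[-1]])
    return path
```

In a deployment, links change and hop count alone ignores link quality, so practical collection protocols usually maintain the tree continuously rather than building it once.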

Conclusion and Future Scope

The proposed iMouse system integrates WSN technologies into surveillance technologies to support intelligent mobile surveillance services. On one hand, its mobile sensors help overcome a weakness of traditional WSNs, which provide only rough environmental information about the sensing field. By including mobile cameras, we can obtain much richer context information and conduct more in-depth analysis.

On the other hand, surveillance can be performed in an event-driven manner. This greatly reduces a weakness of traditional surveillance systems, which must extract meaningful information from massive visual data, because only critical context information is retrieved and proactively sent to users.

The iMouse prototype can be improved or extended in several ways. First, mobile sensor navigation can be improved; for example, localization schemes could guide mobile sensors instead of color tapes. Second, coordination among mobile sensors, especially while they are moving, can be exploited. Third, how to use mobile sensors to improve the network topology deserves further investigation.
